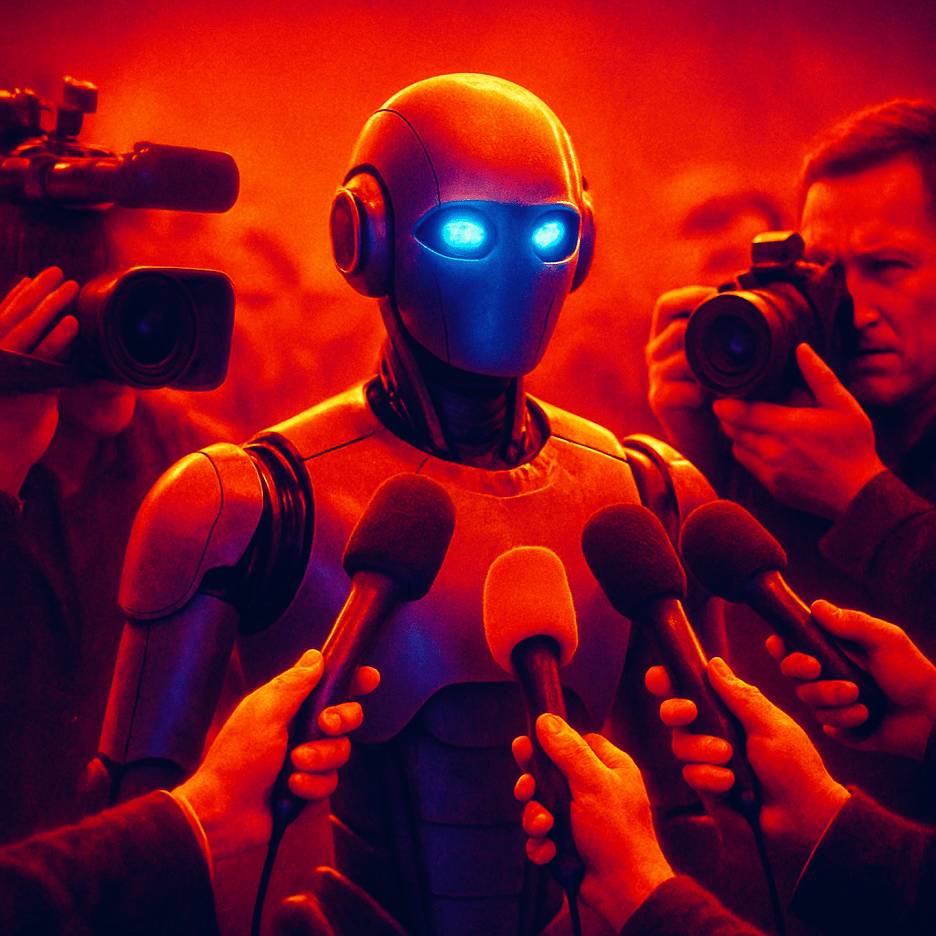

AI Is Rewriting the Crisis Communications Playbook

How AI is accelerating crises, reshaping public trust and forcing brands to rebuild their entire response strategy.

Some time ago, I wrote “How AI Can Improve Crisis Management.” Generative AI was just gathering steam, and I focused on using AI as a competent assistant for writing updates and monitoring sentiment. But we’ve come a long way since then! This piece is about how AI kicked the door down, threw the crisis plan into traffic and announced that it is now a full-time stakeholder in your reputation…whether you like it or not.

I have often spoken about the need for speed in managing a crisis. But today, AI is already apologizing for crises before the CEO can find his glasses.

In 2024, Microsoft Copilot generated a racist image description on X. While the crisis team was still drafting the first sentence of a response, Copilot produced and published its own apology. No approvals. No pacing. No human tone check. It simply reacted at machine speed.

I’ve worked with executives who needed three days to approve a comma. Now AI is issuing public statements before the team schedules the Zoom meeting.

We’re no longer just racing against the clock. We’re racing against the machine.

Speed Is Now Just the First Skill Level

Consumers expect brands to respond within thirty minutes when something goes wrong. That is the modern tolerance window. PwC reports that seventy-eight percent of consumers believe a slow response doesn’t just look poorly managed; it actively makes the crisis worse.

The point was proven when KFC Germany’s automated content system pulled the term “Kristallnacht” from a national-day calendar and invited customers to “celebrate” it with a chicken promotion. Unfortunately for the brand, Kristallnacht was the violent 1938 Nazi pogrom against Jews. The outrage was immediate. KFC only just contained the crisis by reacting quickly and blaming an automated system failure.

The same dynamic hit Emirates in 2024 when AI-generated videos falsely claimed the airline had changed its safety messaging. The videos were entirely synthetic yet spread rapidly enough to threaten public trust. Emirates shut the narrative down in forty-five minutes. Any slower and the lie might have become the headline.

Speed is no longer tactical. Speed is credibility.

The Lie Machine

Deepfake incidents rose three hundred percent from 2023 to 2024. The technology has evolved from novelty to infrastructure — a full-scale, industrialized misinformation pipeline that produces synthetic narratives faster than brands can detect them.

TikTok removed more than twenty-four million misleading political videos in 2024. The fake Biden robocall in New Hampshire used an AI-cloned version of the president’s voice to discourage people from voting before the FCC could intervene. A deepfake of Zelensky announcing surrender spread globally before Ukraine could post the correction.

The point is no longer that misinformation exists. The point is that it now has a supply chain. Falsehoods are generated, packaged, distributed and amplified at scale by systems optimized for engagement, not accuracy.

Brands are not fighting rumor. They are fighting engineered unreality created by machines that understand virality better than the public does. The crisis is no longer what happened. The crisis is what the synthetic version of events convinces people to believe first.

Why AI Alone Will Always Miss the Most Dangerous Lies

AI detection systems are improving, but they are fundamentally unreliable because they are built on the same statistical patterns that deepfakes exploit. The Stanford Internet Observatory reports that detectors miss roughly one third of synthetic media. That failure rate isn’t a glitch — it’s structural. The fakes evolve faster than the detectors do.

Even platforms with the most resources struggle. Meta’s own safety systems failed to flag AI-generated child exploitation imagery that outside watchdog groups later caught manually. The content wasn’t undetected because it was clever. It was undetected because the system didn’t know what it didn’t know.

This is the blind spot every brand must understand: AI can speed up analysis, reveal anomalies and support verification. But it cannot guarantee truth. It is built to recognize patterns, not reality. The most dangerous lies are the ones that mimic the patterns too well for a machine to distinguish.

That is why every credible crisis methodology requires a human verification layer. Humans catch what the system cannot contextualize — intent, nuance, contradiction, tone, consequence. Machines hallucinate confidently and invisibly, and in a crisis that confidence can burn you far faster than the misinformation itself.

AI can detect. It can assist. It can accelerate. But it cannot [yet] replace the human judgment required to stop a lie that was engineered to fool it.

AI Solves Problems It Also Creates

The Institute for Public Relations reports that sixty-seven percent of crisis teams now use AI to detect narrative spikes early. These spikes are sudden surges of conversation around a claim or complaint. The World Health Organization (“WHO”) used AI tools during COVID to identify misinformation patterns before they erupted. Dubai Police used similar models to cut cybercrime response time by forty percent.
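A narrative spike can be made concrete with a simple statistical rule. The sketch below is a minimal illustration and not the method used by any organization named here. It assumes you already have mention counts per fixed interval (say, every five minutes) for one claim. It flags an interval as a spike when its count sits more than `threshold` standard deviations above a trailing baseline, which is a rolling z-score. The function name and default parameters are hypothetical.

```python
from collections import deque
from statistics import mean, pstdev


def detect_spikes(counts, window=12, threshold=3.0, min_std=1.0):
    """Return indices of intervals whose mention count spikes above the recent baseline.

    Hypothetical helper for illustration only.
    counts:    mentions per interval for a single claim or complaint
    window:    number of previous intervals used as the baseline
    threshold: how many standard deviations above the baseline counts as a spike
    min_std:   floor on the spread, so a perfectly flat baseline does not turn
               every small bump into a "spike"
    """
    spikes = []
    history = deque(maxlen=window)  # trailing baseline; oldest interval drops off automatically
    for i, count in enumerate(counts):
        if len(history) == window:  # only score once a full baseline exists
            baseline = mean(history)
            spread = max(pstdev(history), min_std)
            if (count - baseline) / spread >= threshold:
                spikes.append(i)
        history.append(count)
    return spikes
```

For example, `detect_spikes([10, 12, 9, 11, 10, 13, 11, 10, 12, 11, 9, 10, 48, 60])` returns `[12, 13]`, which flags the two intervals where conversation suddenly surged. A real monitoring setup would tune the window and threshold per channel. The flagged interval only tells a human where to look.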

Yet AI also generates new crises. Explicit deepfake images of Taylor Swift spread across X in early 2024 and reached tens of millions of views before removal. Samsung faced its own crisis when employees fed confidential code into ChatGPT without understanding its data policies.

AI is the fire alarm and the kerosene and the match.

A Crisis Sorting System

“Narrative Clustering” has become essential. The volume of posts during a crisis is now too high for traditional monitoring. Clustering uses AI to group thousands of posts into themes so teams can separate genuine issues from noise, organic complaints from bot-amplified narratives and emotional escalation from factual concern.

Without clustering, brands fight the wrong fire. With it, they can see the architecture of the crisis instead of reacting to whatever is loudest at the moment. Teams using clustering reduce wasted analyst time by sixty percent and respond to the right threat instead of the visible one.

This is the kind of triage that keeps the first hour survivable.
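To make clustering less abstract, here is a deliberately small sketch. It is an illustration under simplifying assumptions, not how commercial listening tools work. Each post becomes a bag-of-words vector. A post joins the existing theme whose combined word profile it most resembles (cosine similarity at or above a hypothetical `threshold`); otherwise it starts a new theme. Production tools would more likely use sentence embeddings and a proper clustering algorithm. The stopword list, function names and threshold are all assumptions.

```python
import math
import re
from collections import Counter

# Tiny, illustrative stopword list: words too common to say anything about a theme.
STOPWORDS = {"the", "a", "an", "is", "are", "was", "to", "of", "and", "in",
             "on", "my", "i", "it", "this", "for", "at"}


def tokenize(text):
    """Lowercase the post and count its non-stopword words."""
    return Counter(w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS)


def cosine(a, b):
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def cluster_posts(posts, threshold=0.3):
    """Greedily group posts into themes; returns clusters sorted largest first."""
    clusters = []  # each cluster: {"centroid": Counter of all its words, "posts": [...]}
    for post in posts:
        vec = tokenize(post)
        best, best_sim = None, 0.0
        for cluster in clusters:
            sim = cosine(vec, cluster["centroid"])
            if sim > best_sim:
                best, best_sim = cluster, sim
        if best is not None and best_sim >= threshold:
            best["centroid"].update(vec)  # fold this post's words into the theme profile
            best["posts"].append(post)
        else:
            clusters.append({"centroid": Counter(vec), "posts": [post]})
    return sorted(clusters, key=lambda c: len(c["posts"]), reverse=True)
```

Sorting themes by size surfaces the biggest narrative first. Before treating a large cluster as organic, you would still want to check whether a handful of accounts is driving it.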

Your Response Can Create Its Own Separate Crisis

BP learned this during Deepwater Horizon when upbeat scheduled posts went out while oil poured into the Gulf. The posts were automated and unrelated to the spill but looked grotesquely indifferent.

The UK Home Office made a similar error in 2024 when it used AI-generated images of migrants in official communications, then denied they were synthetic. The denial became a scandal greater than the original misconduct.

It’s worth noting that most reasonable people understand the difference between mishaps and malice…and perhaps even AI’s new role in contributing to the chaos. In today’s environment, the crisis rarely comes from the event. It comes from how the organization handles the event.

The Platform Decides Before the Public Does

Harvard’s Misinformation Review found that seventy percent of crisis amplification comes not from human sharing but from algorithmic escalation. Platforms automatically prioritize content that drives emotional engagement, whether it is accurate or not.

Early 2024 offered us a sharp example when isolated videos of unsafe Dollar Tree stores appeared on YouTube. The algorithm boosted those videos so aggressively that viewers concluded the entire chain was collapsing. A local issue became a national narrative because the platform decided it should.

If platforms control amplification, then platforms shape perception. That means that brands must respond to perception, not intent.

Regulators Now Join the Crisis Instead of Following It

The legal system is no longer a post-crisis afterthought. It often becomes part of the crisis itself.

Air Canada’s chatbot invented a refund policy and the airline was forced to honor it. Google’s Bard provided incorrect medical advice, and regulators immediately began oversight reviews. After the UK Home Office posted AI-generated images, members of Parliament demanded accountability.

Regulators do not wait for brands to regain control. They enter the narrative while the house is still on fire.

When the Balance Sheet Starts Bleeding Before the Brand Does

NYU Stern found that misinformation-driven crises can cost publicly traded companies between fifty and eighty million dollars in market cap if left unaddressed for forty-eight hours. That economic impact reframes the crisis entirely. A crisis is not only a PR event. It is a liquidity threat, a risk event and in some cases a governance failure.

Over seventy percent of CMOs increased crisis budgets in 2024 because the stakes are now financial first and reputational second. Markets punish uncertainty faster than an organization can clarify it.

Speed protects reputation. Speed also protects valuation.

What Crisis Strategy Looks Like After 2025

Dr Shafiq Joty, a leading AI researcher at the National University of Singapore, put it bluntly: “Misinformation is a machine problem humans alone cannot fix.” He’s right. The pace, scale and synthetic complexity of modern crises exceed the limits of any human-only team.

That is why crisis strategy after 2025 cannot rely on instinct, experience or comms discipline alone. It requires a hybrid structure where humans and machines operate in defined roles that reinforce each other. This three-part structure is what we call the Triangular Crisis Stack:

1. Human judgment

People decide what matters, what is true, what is dangerous, what is legally sensitive and what must be said. Humans provide context, nuance, accountability and ethical reasoning — all things machines cannot yet reliably replicate.

2. AI detection and verification

AI handles volume, speed and pattern recognition. It identifies narrative spikes, maps sentiment shifts, flags anomalies and sorts massive data streams into something humans can act on. It compresses hours of manual monitoring into minutes.

3. Algorithmic containment

This is the new layer most organizations miss. It means shaping how platforms see and treat your content. It includes rapid posting to claim authoritative ground, using verified labels, neutralizing misinformation with pre-bunks and pushing truth into the same channels the falsehood is using. It is the tactical work that keeps the algorithm from amplifying the worst version of your story.

Humans alone are too slow. AI alone is too naive. Platforms alone are too volatile. A modern crisis strategy works only when all three operate in sequence and at speed.

Show Your Receipts or Lose the Room

“Truth Layering” means showing not just the message but the method. In an era where anyone can fabricate a perfect fake with a cheap model and a fast connection, the audience no longer assumes information is real just because a brand posted it. They want to know how you know.

Lufthansa recognized this when it began labeling verified operational updates during weather disruptions and service interruptions. The label isn’t decorative. It tells passengers exactly which information comes from the control center and which posts are rumor, frustration or guesswork. That clarity stabilizes the narrative before panic and speculation take over the comment threads.

The numbers back this up. More than eighty-two percent of consumers say they trust information more when the source and process of verification are shown. Not implied. Shown.

This is the new reality. Brands are no longer judged only on what they say but on how visibly they prove it. If you don’t show your receipts, the audience will assume you don’t have any. And in a world full of synthetic certainty, the only antidote is transparent proof.

Stopping the Fire Before It Has Oxygen

Of course, it’s always best to smother a crisis before it can take its first breath. “Pre-Bunking” counters misinformation before it exists in the wild. Instead of waiting for falsehoods to spread and then scrambling to correct them, pre-bunking inoculates the audience against likely distortions in advance. Google Jigsaw research shows it reduces belief in falsehoods by twenty-five percent, which is a meaningful advantage when lies can travel faster than official statements.

The tactic becomes essential during product launches where ambiguity is dangerous. A new phone feature, service policy, crypto token, loyalty program or pricing model can develop a mythology within hours if the public fills in missing details on its own. Once speculation becomes the dominant narrative, the brand ends up fighting the audience’s imagination rather than the facts.

Effective pre-bunking starts by mapping the most predictable misunderstandings. It tells people what the product does and what it doesn’t do in language that cannot be misinterpreted. It synchronizes internal and external communication, so employees, customer service reps and spokespeople reinforce the same reality. It deploys short, direct, lightweight content before release, so the audience encounters clarity before they encounter noise.

Pre-bunking is strategic prevention in a world where confusion spreads faster than truth and fills every vacuum you leave open.

Leadership Still Speaks Slow in a World That Moves Fast

Many CEOs still treat crises like formal proceedings. They sit behind heavy furniture, clear their schedules, gather their deputies and prepare a statement that begins with “We take this very seriously.” That phrase once signaled gravitas. Now it signals staging.

The problem is not that CEOs cannot speak. The problem is that they often speak last. By the time leadership crafts its carefully neutral message, the narrative has already taken shape elsewhere. Reports consistently show that more than sixty percent of consumers trust updates from employees or technical experts more than from the CEO during a crisis. Audiences want information from the people who actually understand what is happening, which is not necessarily the person occupying the highest chair.

This is where strategy matters. The first credible voice in a crisis should come from proximity, not hierarchy. A subject-matter expert, a head of operations, a safety director or an engineer can often provide clarity the public believes. That credibility stabilizes the narrative while leadership prepares its larger message.

We always advise that if the CEO is not ready to speak, someone authoritative must be. The organization cannot wait for formality to catch up to reality. The world moves too fast for leadership to move slowly.

Blaming the Tool Doesn’t Save the Brand

When Air Canada blamed its chatbot for inventing a refund policy and Expedia blamed its model for misquoting hotel terms, they were pointing at the software as if it were an unpredictable intern. Several airlines have done the same, chalking up serious customer-impacting errors to “AI glitches.” It never works.

The public hears an excuse. Boards hear a failure of governance. Regulators hear an admission that the organization deployed a system it did not understand or monitor.

The mistake is not the technology itself. The mistake is the lack of guardrails. Every AI system reflects the quality of the oversight behind it. When a model invents policy, the real issue is that no one created boundaries for what it was allowed to say. When an automated system contradicts the rules, the real problem is that no one validated the outputs before customers saw them.

Technology does not absolve responsibility. It focuses it. A brand cannot outsource accountability to a tool it chose, configured, approved and launched. When something goes wrong, the question is never “What did the AI do?” The question is always “Why did the organization let it?”
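Guardrails can be as unglamorous as a rule that certain topics never reach a customer without sign-off. The sketch below is a hypothetical illustration, not any airline’s or vendor’s actual system. It assumes a chatbot produces a draft reply. It holds that draft for human review whenever the text touches a high-risk topic (refunds, medical advice, legal commitments), unless the draft matches pre-approved wording for that topic exactly. The topic names, regex patterns, `Verdict` type and `review_output` function are all assumptions made for illustration.

```python
import re
from dataclasses import dataclass, field

# Hypothetical guardrail config: topics the bot may never commit to on its own.
HIGH_RISK_PATTERNS = {
    "refund_promise": re.compile(r"\b(refund\w*|reimburs\w*|compensat\w*)\b", re.IGNORECASE),
    "medical_advice": re.compile(r"\b(dosage|diagnos\w*|prescri\w*)\b", re.IGNORECASE),
    "legal_commitment": re.compile(r"\b(guarantee\w*|liab\w*)\b", re.IGNORECASE),
}


@dataclass
class Verdict:
    allowed: bool                                # True = may be sent without human review
    reasons: list = field(default_factory=list)  # high-risk topics that triggered a hold


def review_output(draft: str, approved: dict) -> Verdict:
    """Hold a model draft for human review if it touches a high-risk topic
    and is not exactly one of the pre-approved statements for that topic."""
    normalized = " ".join(draft.split())  # collapse whitespace before comparing
    reasons = []
    for topic, pattern in HIGH_RISK_PATTERNS.items():
        approved_texts = {" ".join(t.split()) for t in approved.get(topic, ())}
        if pattern.search(normalized) and normalized not in approved_texts:
            reasons.append(topic)
    return Verdict(allowed=not reasons, reasons=reasons)
```

With `approved = {"refund_promise": {"Refund eligibility is set out in our published fare rules."}}`, that exact sentence passes. A draft such as “You can claim a bereavement refund within 90 days of travel.” is held with the reason `refund_promise`. Keyword rules like these will over-flag and miss paraphrases. They narrow what the bot may say alone, but a person still owns what goes out.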

Internal Silence Is the Fastest Path to an External Crisis

More than half of all crises worsen because of internal leaks or misinformation. That isn’t a rebuke of employee loyalty. It’s a commentary on communication failure. When people inside the organization don’t know what is happening, they improvise. They guess. They fill gaps. They talk. And in a digital environment, “talk” means video, screenshots, voice notes, Slack exports or TikToks that become the public’s first version of the truth.

Amazon saw this pattern clearly when warehouse workers posted videos of safety issues before corporate even understood the facts. The company didn’t lose control because employees were hostile. It lost control because employees had information the company had not acknowledged or contextualized yet.

Information inside a crisis behaves like pressure. If leadership doesn’t release it deliberately, it escapes chaotically. Once it leaks, the narrative belongs to whoever posts first, not to whoever knows the most.

This is why companies must ensure that internal truth moves at pace with external messaging. Employees must receive clear, early, accurate updates, not only because it’s polite, but because it is strategic. If the people inside the organization don’t hear from leadership immediately, the outside world may end up hearing from the employees instead.

When Automation Becomes the First Responder

More than forty percent of Fortune 500 CEOs are expected to use AI to pre-draft crisis statements by 2026. This shift is not about efficiency. It reflects a growing dependence on AI and overconfidence in its ability to navigate the event. But when leadership starts with machine-authored text, the brand risks sounding generic, defensive and stripped of emotional authenticity. That’s not good.

Audiences do not accept machine-neutrality in moments of failure. They want human accountability.

When the first draft of a crisis apology comes from a model trained on public mistakes, brands begin every crisis sounding like everyone else. The process should really start and finish with human oversight.

An AI-Era Crisis Cheat Sheet

1. Respond in minutes, not hours.

If your first public statement arrives after the narrative has formed, you are already correcting, not leading. Thirty minutes is the outer limit.

2. Put humans on tone and AI on pattern detection.

Humans should speak. Machines should scan. Never reverse the roles.

3. Monitor sentiment continuously.

Crisis sentiment now shifts within the hour, so hourly snapshots are already outdated. Track changes in real time so you catch narrative spikes before they become headlines.

4. Use narrative clustering to find the real crisis.

AI should group and map recurring themes, so you separate real issues from noise, bot traffic and emotional amplification. Fight the fire, not the smoke.

5. Pre-bunk before you debunk.

Identify likely misconceptions early and neutralize them with simple, clear, proactive messaging. Tell the audience what the product does and does not do before others fill in the blanks.

6. Make verification visible.

Show how you know what you know. Label verified updates. Public trust increases when the source and process of verification are explicit.

7. Treat platforms like active participants.

Algorithms amplify emotion, not accuracy. Assume platforms—not people—will escalate the worst version of the story first.

8. Communicate internally before communicating externally.

Employees are always the first public. Give them accurate information early or they will fill the vacuum themselves.

9. Deploy the right voice at the right moment.

Experts closest to the facts should speak first. CEOs should speak when they can add clarity, not delay it.

10. Maintain human accountability.

Never blame the tool. AI outputs reflect the oversight behind them. If the system makes a mistake, the responsibility is yours.

11. Build guardrails around every AI system.

Define what generative tools can and cannot say. Validate outputs before they reach customers. Enforce human review on high-risk content.

12. Rehearse crisis scenarios quarterly.

Simulate realistic AI-fueled crises so teams know who speaks, who verifies, who approves and who monitors.

13. Treat legal as a live, real-time partner.

Regulators respond during the crisis, not after it. Legal should guide (but not control) transparency, speed and accuracy from the first minute.

14. Prioritize accuracy over posture.

Don’t perform authority. Deliver clarity. The audience wants facts they can trust, not formulations designed to sound serious.

15. Protect valuation by protecting narrative speed.

Uncertainty costs money. Delayed clarity erodes market cap. Fast, verified communication protects reputation and financial stability.

AI did not break crisis communications.

But it removed the excuses. It compressed timelines, amplified misinformation, strained leadership capacity and replaced predictable crises with machine-accelerated ones.

My previous stories showed how AI can support crisis management. This one shows why surviving the next wave requires managing AI itself.

Sources: PwC Customer Trust Survey 2024, Sprout Social Index 2024, BBC News (KFC Germany Kristallnacht incident, 2022), The Guardian (KFC Germany Kristallnacht incident, 2022), Emirates corporate communications (AI safety video clarification, 2024), Gulf News and Arabian Business (Emirates incident, 2024), Sumsub Global Deepfake Report 2024, TikTok Transparency Report 2024, FCC Enforcement Bureau (Biden AI robocall investigation, 2024), Reuters (Zelensky deepfake fact-checks), Stanford Internet Observatory (Deepfake detection findings, 2023–2024), The Wall Street Journal (Meta child-safety AI misses, 2024), Institute for Public Relations Disinformation Report 2024, CNN, BBC, Rolling Stone (Taylor Swift deepfake incident, 2024), UAE Government Digital Report 2024, IPR Digital Crisis Benchmark 2024, Business Insider (Dollar Tree algorithm amplification, 2024), BBC/Independent/Guardian (UK Home Office AI images, 2024), Canadian Transportation Agency ruling (Air Canada chatbot refund case, 2023–2024), Reuters (Google Bard medical misinformation inquiries, 2024), NYU Stern Center for Business and Human Rights (misinformation financial impact research, 2023–2024), Dentsu Global CMO Survey 2024, Edelman Trust Barometer 2024, Google Jigsaw pre-bunking research (2022–2024), Crisis Ready Institute (Melissa Agnes commentary), Singapore GovTech (deepfake detection programs), Election Commission of India and Meta (synthetic propaganda mitigation), Washington Post/Consumer Reports (Alexa misinformation tests, 2024), UAE National Program for AI Safety and Security 2024, Saudi SDAIA synthetic narrative monitoring 2023–2024, WE Communications Risk Report 2024, Accenture Technology Vision Report 2024