Mickey Mouse vs AI: What Brands Still Control


I just learned that Disney entered into a $1 billion strategic investment and commercial partnership with OpenAI, paired with a multi-year licensing agreement that allows OpenAI’s tools, including Sora, to generate content using Disney-owned characters within clearly defined contractual boundaries. That stopped me cold. Not because Disney is experimenting with AI—that part was inevitable—but because of how they’re doing it.

The agreement gives OpenAI sanctioned access to more than 200 Disney characters, while Disney retains approval rights, usage constraints and governance over how those characters appear inside OpenAI’s systems. Mickey Mouse can now be summoned with a prompt, but only inside a framework Disney negotiated and controls. They’re opening the door while remaining squarely in the doorway.

Shutterstock followed a similar logic by licensing its stock photo and video library directly to OpenAI rather than fighting from the sidelines. Meanwhile, companies like Universal Music Group have taken the opposite posture, aggressively blocking unauthorized AI voice models of their artists across platforms. Warner Bros. appears to still be on the fence, publicly exploring AI-assisted storytelling tools while stopping short of licensing core characters.

The implication is bigger than Disney. It means we can now create Mickey Mouse stories. Donald Duck stories. Entire alternate universes of officially sanctioned fan fiction generated at machine speed. That raises an obvious question: will other brands and IP holders follow suit? Will studios license characters not just to platforms but to people? Will franchises decide it’s better to control the sandbox than pretend it doesn’t exist?

Push it a step further and the question gets more provocative. If Disney can license Mickey Mouse into AI systems, do actors license themselves? Does Robert De Niro authorize a digital version of his voice and face for certain roles, contexts or genres? Does Brad Pitt? Leonardo DiCaprio? Do performers become rights-managed identity assets, negotiated and governed like characters rather than flesh-and-blood talent?

For the time being, I guess the answer is “no,” since the 2023–2024 SAG-AFTRA strikes centered precisely on preventing unlicensed AI use of actor likenesses and voices. But the times, they are a-changin’.

If Mickey Mouse Needs Proof…

None of this is science fiction anymore. If Mickey Mouse—arguably the most legally protected, culturally embedded character on earth—now needs contractual guardrails to exist inside AI systems, then no brand asset is inherently self-authenticating anymore. Ownership alone no longer confers truth.

By the way, Getty Images reached the same conclusion from the opposite direction, suing AI firms for training on its image library without permission.

Disney isn’t resisting AI. It’s absorbing it, formalizing it and fencing it. It’s treating character generation not as a creative experiment but as a trust and provenance problem.

But if Mickey Mouse needs proof, what exactly are the rest of us relying on?

The Scale of the Trust Collapse

AI already fakes everything that once anchored trust. Faces are cloned. Voices are synthesized. Endorsements are fabricated. Reviews are generated by the thousands. Europol’s 2024 Internet Organised Crime Threat Assessment identifies AI-driven impersonation as one of the fastest-growing digital crime vectors globally. The FBI reported $16.6 billion in cybercrime losses for 2024, a 33% increase from 2023, with AI-assisted fraud cited as a major accelerant. Marketing didn’t break trust, but it is now operating inside the blast radius.

Deepfakes Are Now an Operational Risk

Deepfakes are no longer edge cases. They are operational threats. We now regularly build deepfake scenarios into the crisis and issues management plans we prepare for clients. HSBC and other global banks now run AI impersonation simulations as part of executive risk training.

AI-generated videos and audio have already been used to impersonate executives, politicians and celebrities with enough realism to move markets and drain accounts. Meta confirmed in 2024 that it removed thousands of AI-generated celebrity scam ads across Instagram and Facebook using fabricated likenesses. For brands, the risk isn’t hypothetical embarrassment. It’s real reputational exposure at machine speed.

When Identity Becomes the Gatekeeper

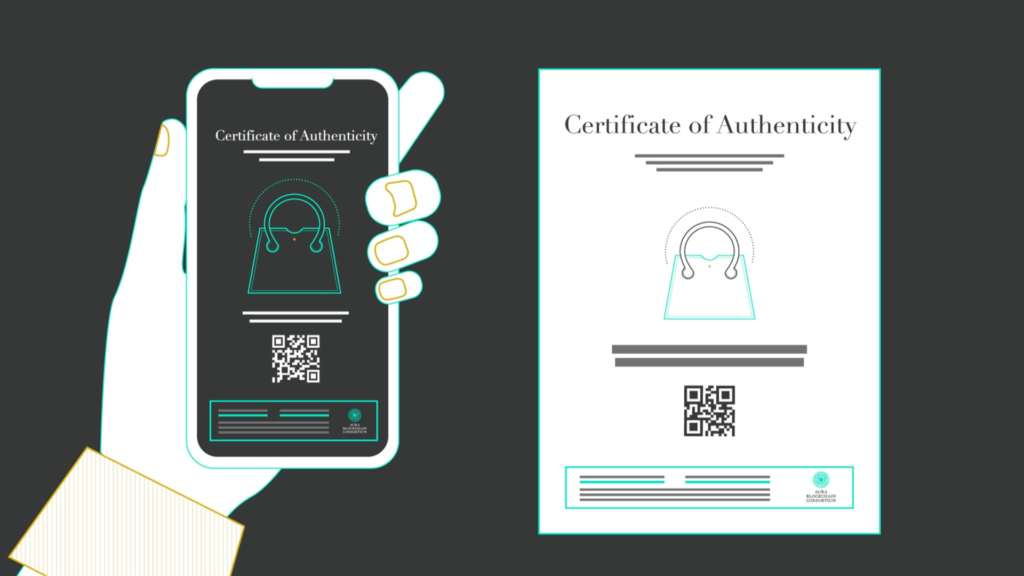

This is where identity and provenance stop being abstract concepts and start behaving like infrastructure. Blockchain-based identity systems introduce something marketing has never had at scale: tamper-proof verification. Forbes reported that decentralized identity pilots are being deployed specifically to combat AI-driven misinformation and fraud. Verification shifts trust from claims to math. LVMH’s Aura blockchain was built for this exact reason: to authenticate luxury goods at the source.

World ID applies this same logic to people, solving the problem of proving a real, unique human exists online without revealing who they are or creating a new surveillance layer. That’s the future we face: not knowing what’s human-generated and what’s machine-generated. Even proving that we are human may be a challenge.

Bots Are Polluting the Metrics

Bots are already distorting the metrics marketing leadership relies on. An Imperva 2024 report found that 49 percent of all internet traffic is now bot-driven, much of it powered by generative AI. That means impressions, engagement and even conversions are increasingly polluted by non-human activity. Proof-of-human systems don’t just improve trust. They clean the data marketers make decisions on. X and LinkedIn have both acknowledged ongoing bot mitigation challenges despite AI-based detection. For marketers, that means we are paying to reach audiences that aren’t real.
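To make the cost of polluted metrics concrete, here is a back-of-the-envelope sketch in Python. It assumes, purely for illustration, that the bot share of a campaign's impressions matches the 49 percent share of overall internet traffic cited above; a real campaign's invalid-traffic rate will differ and should come from your own measurement vendor. The function name and the campaign figures are hypothetical.

```python
def human_adjusted_metrics(impressions: int, spend: float, bot_share: float) -> dict:
    """Estimate human reach and the cost per thousand *human* impressions.

    bot_share is an assumed fraction of impressions served to bots (0 <= x < 1).
    """
    if impressions <= 0:
        raise ValueError("impressions must be positive")
    if not 0.0 <= bot_share < 1.0:
        raise ValueError("bot_share must be in [0, 1)")

    human_impressions = round(impressions * (1.0 - bot_share))
    reported_cpm = spend / impressions * 1000         # what the dashboard shows
    effective_cpm = spend / human_impressions * 1000  # what a human view really cost

    return {
        "human_impressions": human_impressions,
        "reported_cpm": round(reported_cpm, 2),
        "effective_cpm": round(effective_cpm, 2),
    }


# Hypothetical campaign: 1,000,000 impressions bought for $10,000.
result = human_adjusted_metrics(1_000_000, 10_000.0, 0.49)
print(result)
```

Under that assumption, a dashboard CPM of $10.00 works out to roughly $19.61 per thousand human impressions. The budget is the same, but the real audience is about half the reported size.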

Proof of Personhood Moves Into Strategy

Proof-of-personhood is moving out of theory and into strategy. MIT Technology Review has identified it as a critical missing layer in an AI-dominated internet. When machines can generate content indistinguishable from human output, the differentiator becomes the ability to verify that a real person was involved at all. Storytelling doesn’t disappear. It gains a provenance layer. OpenAI itself has publicly warned that proof-of-human may be required to protect future AI systems.

Brands Are Quietly Rebuilding Trust

Brands are already adapting, often quietly. Vogue Business reported in 2024 that more than 40 luxury and fashion brands are piloting blockchain-based authentication for products and experiences. These initiatives are not about collectibles. They are about provenance. A digital record that proves origin, ownership and legitimacy in a way screenshots and certificates never could. Nike’s early NFT experiments foreshadowed this shift toward authenticated digital ownership.
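For readers who want to see why such a record is harder to fake than a screenshot, here is a minimal Python sketch of a hash-chained provenance log. It is not how Aura or any brand's system is actually built. It only illustrates the tamper-evidence idea: each entry commits to the one before it, so editing history breaks the chain. The function names and the handbag events are invented for the example, and a real blockchain adds digital signatures and distributed consensus on top.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def record_hash(body: dict) -> str:
    """SHA-256 over a canonical JSON encoding of the entry body."""
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def append_event(chain: list, event: dict) -> None:
    """Add an event that commits to the hash of the previous entry."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    body = {"event": event, "prev_hash": prev_hash}
    chain.append({**body, "hash": record_hash(body)})


def verify_chain(chain: list) -> bool:
    """Recompute every hash and link; any edit to history returns False."""
    prev_hash = GENESIS
    for entry in chain:
        body = {"event": entry["event"], "prev_hash": entry["prev_hash"]}
        if entry["prev_hash"] != prev_hash or entry["hash"] != record_hash(body):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical product history.
chain = []
append_event(chain, {"item": "handbag-001", "action": "manufactured", "owner": "brand"})
append_event(chain, {"item": "handbag-001", "action": "sold", "owner": "customer-a"})
print(verify_chain(chain))  # the untouched history checks out

chain[0]["event"]["owner"] = "counterfeiter"  # rewrite the origin story
print(verify_chain(chain))  # the edit is detected
```

The log does not stop anyone from making a fake bag. It makes a fake history detectable, which is the claim provenance systems are actually making.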

Verification Without Surveillance

Privacy is non-negotiable. Verification systems that require full data surrender simply recreate the surveillance economy under a new name. Approaches that rely on cryptographic techniques like zero-knowledge proofs confirm uniqueness without storing personal data. That allows brands, creators and audiences to participate without turning identity into collateral damage. Apple has taken a similar stance with on-device AI processing rather than cloud-based identity exposure.
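If “zero-knowledge proof” sounds like hand-waving, the core idea fits in a few lines. The Python sketch below is a toy version of the classic Schnorr identification protocol: a prover convinces a verifier that they hold a secret without ever sending the secret. It is an illustration only. The group parameters are deliberately tiny and insecure, the function names are my own, and it does not represent how World ID or any commercial system is implemented. Those systems use far more elaborate proofs to establish uniqueness.

```python
import secrets

# Toy parameters, far too small for real use: G has prime order Q modulo P.
P, Q, G = 23, 11, 2


def keygen() -> tuple:
    """Return (secret x, public y = G^x mod P). Only y is ever shared."""
    x = secrets.randbelow(Q - 1) + 1  # secret in 1..Q-1
    return x, pow(G, x, P)


def commit() -> tuple:
    """Prover picks a one-time nonce r and sends t = G^r mod P."""
    r = secrets.randbelow(Q)
    return r, pow(G, r, P)


def respond(x: int, r: int, challenge: int) -> int:
    """Prover's answer; the random nonce r masks the secret x."""
    return (r + challenge * x) % Q


def verify(y: int, t: int, challenge: int, s: int) -> bool:
    """Verifier checks G^s == t * y^challenge (mod P) using public values only."""
    return pow(G, s, P) == (t * pow(y, challenge, P)) % P


# One honest round: the verifier sees y, t, the challenge and s, never x.
x, y = keygen()
r, t = commit()
challenge = secrets.randbelow(Q)  # chosen by the verifier after seeing t
s = respond(x, r, challenge)
print(verify(y, t, challenge, s))
```

The verifier ends up storing a public value and a yes-or-no answer, not the secret itself. That is the property that lets verification happen without turning identity into a database.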

Your Brand Voice Is Already Being Cloned

Brand voice is no longer safe either. WARC reported in 2024 that over 60 percent of marketers have already encountered AI-generated misuse of their brand voice or identity. Copy that looks right, sounds right and feels right can now be produced instantly by systems trained on scraped data. Intent is invisible. Origin is not. The New York Times has sued OpenAI and Microsoft over unauthorized training on its content.

Marketing Meets Cybersecurity

Identity architecture is converging with enterprise security. Gartner predicts that over 60 percent of enterprises will adopt zero-trust frameworks by 2026, driven largely by AI-enabled impersonation threats. Marketing and cybersecurity are heading toward the same conclusion from opposite directions: continuous verification beats assumed trust. Microsoft has embedded zero-trust identity principles across Azure and enterprise marketing stacks.

Why Disney Drew the Line

Disney’s OpenAI deal makes the point explicit. The company didn’t just license characters. It retained strict approval rights and usage controls over how those characters are generated and deployed. Reuters noted that Disney prioritized verification and governance over volume. Mickey Mouse can exist inside AI systems, but only with an attestation layer that confirms legitimacy.

In practice, that means Mickey Mouse can only be generated inside licensed AI systems, for approved uses and within predefined boundaries, with misuse blocked before it happens rather than litigated after. Disney isn’t enforcing its brand in court—it’s embedding its rules directly into the machines that create the content.

The Only Thing AI Can’t Fake

That is the line that matters. AI can generate brilliance, scale creativity and manufacture authority. What it cannot do on its own is prove origin. Deloitte’s 2024 Trust in Technology study found that 73 percent of consumers say verified authenticity matters more than brand messaging. The market is already voting.

What Brands Must Decide Now

Brands that continue to chase reach without verification will end up with inflated dashboards and hollow credibility. The brands that endure will be the ones that treat identity, provenance and proof as core assets rather than technical details. When AI can fake everything, control shifts to whoever can prove what’s real.